The other day I was searching for a particular email file in a Maildir on one of our mail servers. Knowing only the From address, I thought I'd use grep. Unfortunately, it failed with an error: /bin/grep: Argument list too long. Here is how to overcome this grep error...

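/bin/grep: Argument list too long

"Argument list too long" means a single command was given more arguments than the ARG_MAX limit allows. The shell expands * to every file name in the directory, and the resulting list is too large to hand to grep. So how do you grep through a large number of files? For example, this fails: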

grep -r "example\.com" *
-bash: /bin/grep: Argument list too long

Well, no wonder: there are over 190,500 files in that particular Maildir:

ls -1 | wc -l
190516
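To see how close a directory is to the limit, here is a rough sketch, assuming bash and a system that provides getconf. It compares the byte size of the names that * expands to with the value reported by getconf ARG_MAX. Treat the result as an estimate only: the kernel also counts environment variables and per-argument pointers against the limit, so a command can fail somewhat before the two numbers meet.

```shell
#!/usr/bin/env bash
# Rough check, run in the directory you want to search: how many bytes
# would "grep ... *" put on the command line, compared with ARG_MAX?
limit=$(getconf ARG_MAX)

# printf is a bash builtin, so this expansion of * is never passed to exec()
# and cannot itself fail with "Argument list too long".
# Each file name costs its length plus one terminating NUL byte.
# $(( )) strips any padding that some wc implementations add.
needed=$(( $(printf '%s\0' * | wc -c) ))

echo "ARG_MAX:            $limit bytes"
echo "names matched by *: $needed bytes"
if [ "$needed" -ge "$limit" ]; then
  echo "grep ... * would fail with: Argument list too long"
fi
```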

There are several ways to overcome this error. One, for instance, is to let find list the files and have xargs pass them to grep in batches that fit within the limit:

find . -type f | xargs grep "example\.com"
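A slightly more defensive variant of the same idea is sketched below as two hypothetical helper functions (find_mail and find_mail_exec are illustrative names, not existing tools). Both add grep -l, so only the names of matching files are printed, which is what you want when hunting for one message file. The first uses find -print0 with xargs -0 so file names containing spaces or newlines arrive intact; the second lets find batch the names itself with -exec ... {} +, which needs no xargs at all.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list files under DIR whose contents match PATTERN.
# find emits NUL-terminated names; xargs -0 splits them into batches that
# each fit within ARG_MAX and runs grep once per batch.
find_mail() {
  local dir=$1 pattern=$2
  find "$dir" -type f -print0 | xargs -0 grep -l -- "$pattern"
}

# Same result without xargs: with "{} +", find appends as many names as fit
# to each grep invocation, much like xargs does.
find_mail_exec() {
  local dir=$1 pattern=$2
  find "$dir" -type f -exec grep -l -- "$pattern" {} +
}
```

For example, assuming the mailbox lives in ~/Maildir, find_mail ~/Maildir 'example\.com' prints the path of every message that mentions example.com.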

A second option is to replace the * (asterisk) with a . (dot), so that grep receives only the directory and recurses into it by itself:

grep -r "example\.com" .

In newer versions of GNU grep you can omit the "." as well: when grep -r is given no file, it searches the current directory.
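Why does the dot work? With *, bash expands the glob into one argument per file before grep even starts, and all of them must be handed to /bin/grep in a single exec() call. With ., grep receives one argument and walks the directory tree on its own. The sketch below makes the difference visible with a hypothetical shell function, count_args, that only prints how many arguments it received. Shell functions run inside bash without exec(), so they are not subject to ARG_MAX.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the number of arguments it was called with.
# Shell functions are not exec()'d, so this works even for huge lists.
count_args() { echo "$#"; }

# One argument per non-hidden entry in the current directory
# (or 1 in an empty directory, where bash leaves the * unexpanded).
count_args *
# Always prints 1: grep -r would walk the tree from here by itself.
count_args .
```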


Argument list too long when deleting files in Bash (Bonus!)

Resolve: /bin/rm: Argument list too long.

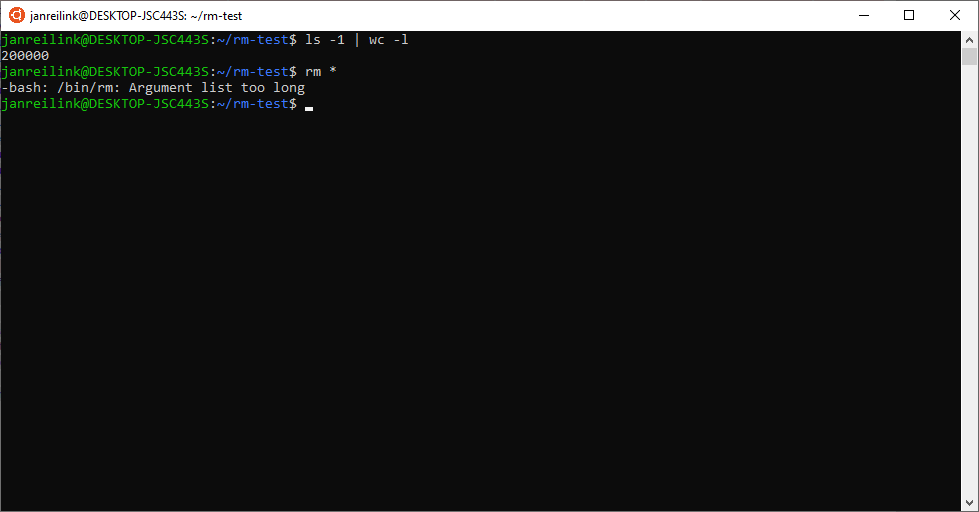

If you're trying to delete a lot of files in Bash, this may also fail with an "Argument list too long" error message:

~/rm-test$ rm *
-bash: /bin/rm: Argument list too long

"Argument list too long" means that a single command was fed so many arguments that it hit the ARG_MAX limit. ARG_MAX defines the maximum length of the arguments passed to the exec function. Besides grep, commands such as rm, cp, ls, mv and so on are subject to this limitation as well (thanks, Hayden James).

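The limit really applies to exec(), not to the shell itself, and a short experiment shows it. This sketch, assuming bash on a typical Linux system with getconf and yes available, builds an argument list whose strings alone are larger than ARG_MAX, passes it to an external command (env true), and then to the printf builtin. The external command fails (its error message is discarded here), while the builtin accepts the same list. The 8 MiB cap is an arbitrary safety guard added for this demo, in case getconf reports a huge value, for example with an unlimited stack size.

```shell
#!/usr/bin/env bash
limit=$(getconf ARG_MAX)
# Arbitrary safety cap so the demo stays small if ARG_MAX is reported as huge.
if [ "$limit" -gt 8388608 ]; then limit=8388608; fi

# Each "xxxxxxx" costs 7 characters plus a terminating NUL = 8 bytes,
# so n such arguments add up to more than $limit bytes.
n=$(( limit / 8 + 1 ))
args=( $(yes xxxxxxx | head -n "$n") )

# External command: bash has to exec() env, and the kernel refuses (E2BIG).
env true "${args[@]}" 2>/dev/null || echo "external command failed, status $?"

# Builtin: no exec() happens, so the same list is accepted.
printf '%s\n' "${args[@]}" | wc -l   # prints the value of $n
```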

There are a couple of commands you can use to work around this error and remove those many thousands of files. In the following examples I use a directory ~/rm-test containing 200,000 files, and I time each command to compare their speed.

time find . -type f -delete

real 0m2.484s
user 0m0.120s
sys 0m2.341s

time ls -1 | xargs rm -f

real 0m2.988s
user 0m0.724s
sys 0m2.375s
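Parsing ls output is fragile: a file name containing a space or newline gets split into several bogus names. A more robust sketch, assuming bash and an xargs that supports -0 (GNU and BSD xargs both do), replaces ls -1 with the printf builtin. Because printf runs inside bash, expanding * for it never goes through exec() and cannot hit ARG_MAX; xargs then splits the NUL-separated names into rm invocations that each fit. delete_all is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove the files matched by * inside DIR.
# Like "rm *", it skips hidden files; rm without -r refuses subdirectories.
delete_all() {
  # Subshell, so the cd does not change the caller's working directory.
  ( cd -- "$1" && printf '%s\0' * | xargs -0 rm -f -- )
}
```

Usage: delete_all ~/rm-test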

mkdir empty
time rsync -a --delete empty/ rm-test/

real 0m2.880s
user 0m0.170s
sys 0m2.642s

Or remove the entire directory:

time rm -r rm-test/

real 0m2.776s
user 0m0.220s
sys 0m2.510s

In Linux, you can recreate that directory with the mkdir command if you still need it. To create 200,000 small (1 KiB) test files, run:

dd if=/dev/urandom bs=1024 count=200000 | split -a 4 -b 1k - file.

As you can see, the timing results don't differ that much. In this test, find with -delete is the fastest solution, followed by rm -r. The slowest is ls -1 | xargs rm -f. The downsides of rsync are that 1) it is CPU heavy, and 2) you need to create an empty directory first.
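To repeat the comparison yourself, here is a rough benchmark sketch. make_test_files and run_benchmark are hypothetical helper names; the file-creation step reuses the dd | split pipeline above, and the script covers find -delete, ls -1 | xargs rm -f and rm -r (rsync is left out, since it may not be installed). It recreates the test directory before each method so they all start from the same state. Your timings will depend on your disk, file system and page cache.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create COUNT files of 1 KiB each in DIR with dd | split.
# split -a 4 gives at most 26^4 = 456,976 distinct names (file.aaaa ... file.zzzz).
make_test_files() {
  local dir=$1 count=$2
  mkdir -p -- "$dir"
  ( cd -- "$dir" &&
    dd if=/dev/urandom bs=1024 count="$count" 2>/dev/null | split -a 4 -b 1k - file. )
}

# Hypothetical helper: time three deletion methods on COUNT files in ./rm-test.
run_benchmark() {
  local count=$1 method
  for method in find xargs rm; do
    make_test_files rm-test "$count"
    echo "== $method"
    case $method in
      find)  time (cd rm-test && find . -type f -delete) ;;
      xargs) time (cd rm-test && ls -1 | xargs rm -f) ;;
      rm)    time rm -r rm-test ;;
    esac
  done
}
```

Run it from a scratch directory with run_benchmark 200000. No rm-test directory is left behind afterwards, because the last method removes it.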